How can Copilot help us create a better workplace user experience?

We've been exploring Microsoft's new AI chatbot Copilot, as part of our efforts to evaluate our workplace tools, identify opportunities to support individuals in their work and explore where accessibility issues are present.

Recently we’ve been taking a more in-depth look at the workplace tools we use, and how they can help us create more inclusive ways of working. Inclusive colleague experience is often overlooked, as people see usability and accessibility as domains for B2C or citizen-focused products and services.

But we all spend a lot of our time at work, using digital products that could be better. We also face an access-to-work challenge if we neglect to build, or buy, platforms that help people rather than hinder or harm them. This applies from recruitment, through onboarding, to the workplace tools we use every day to meet, communicate and collaborate.

There are certain tools which are now widely accepted as integral to the research and design process, such as whiteboard tools like Miro and Figma. But these come with their own set of accessibility issues, and we’re keen to explore where there might be better alternatives or options for integrations to support users with access needs.

In the past year, we’ve started the ‘Access at Nexer’ Employee Resource Group (ERG), which is a disability discovery and focus group, with a mission to understand and address barriers that may prevent individuals from gaining access to tools, processes and ways of working that will benefit the entire Nexer community. Over the next few months, this Group plans to take a closer look at the tools we’re making use of, to identify opportunities to support individuals in their work and explore where accessibility issues are present within these tools.

As a wider organisation, Nexer Group uses the Microsoft 365 ecosystem, including Teams, SharePoint and OneNote, as well as the standard Office suite. This also includes some of the newer developments from Microsoft, such as the large language model chatbot Copilot, which was introduced publicly in autumn 2023.

To set the scene for this exploration, we spoke with some of our team about how they currently use accessibility tools within Microsoft products and Teams. Some used tools like Magnifier, closed captions, transcripts and colour contrast tools to tailor their experience, while others used keyboard shortcuts and styles to ensure consistency. We have seen before that access needs can clash. For example, a team member who relies on captioning often also needs to see mouth patterns and facial expressions, while another team member in the same meeting might prefer cameras to be off for a number of reasons. A good team will explore these clashes together and gain an understanding of competing experiences.

Other features, like sharing PowerPoint presentations live in calls instead of screensharing, are something we’ve increasingly been exploring for a more inclusive experience too. Finally, we’ve been taking a closer look at how Copilot can be used alongside these tools to support users with different access needs.

This series of blog posts will explore what we’ve learned along the way.

What is Copilot?

An important question, and who better to answer than Copilot itself:

“Microsoft Copilot is an AI companion designed to assist users by providing information, answering questions, and engaging in conversation. Copilot is integrated with Microsoft Teams to enhance productivity during meetings. It can summarise key discussion points, identify areas of agreement or disagreement, and suggest action items in real-time. It can also generate meeting notes, list action items, and suggest follow-up questions.”

Testing Copilot’s functionality

Over the past few weeks we’ve spent some time getting acquainted with Copilot, both in browser and Teams, and exploring its many use cases.

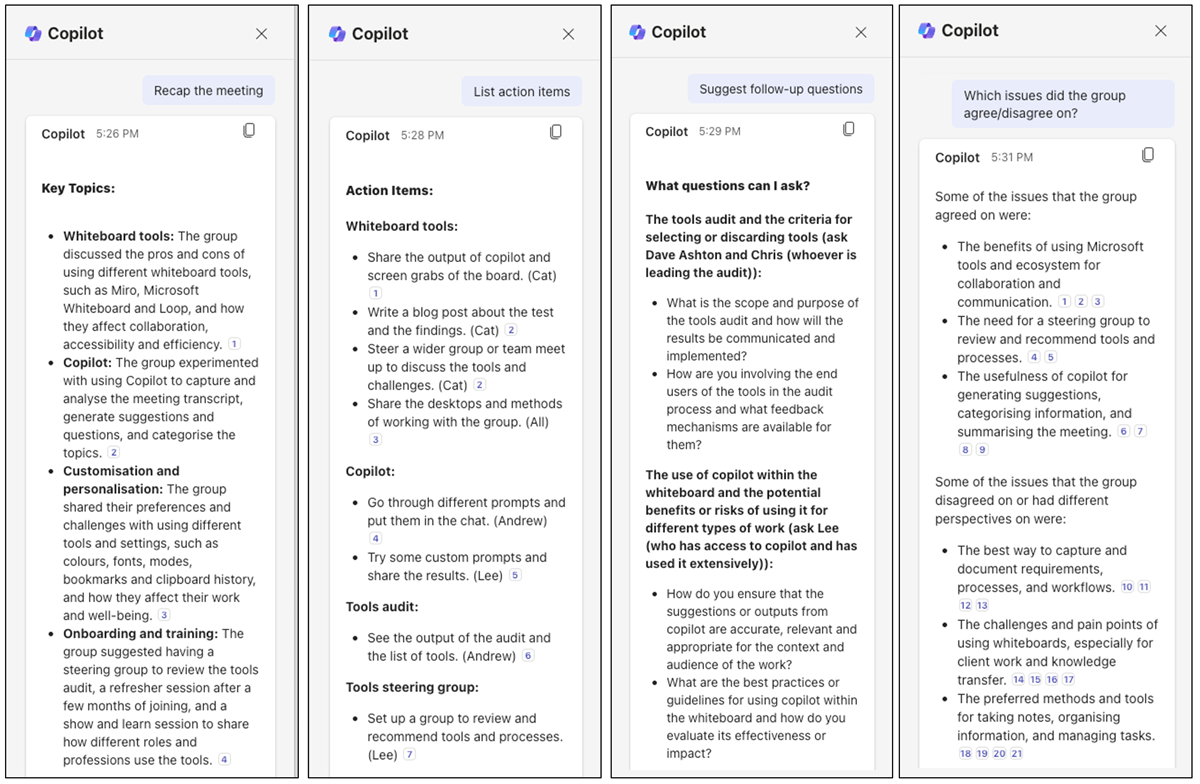

A particular standout feature is Copilot’s ability to collect and present insights from meetings based on instructions from the user. This could include summarising key topics, actions to follow up on, suggested questions to ask, and an overview of different points of view expressed in the meeting, with points of agreement and disagreement highlighted.

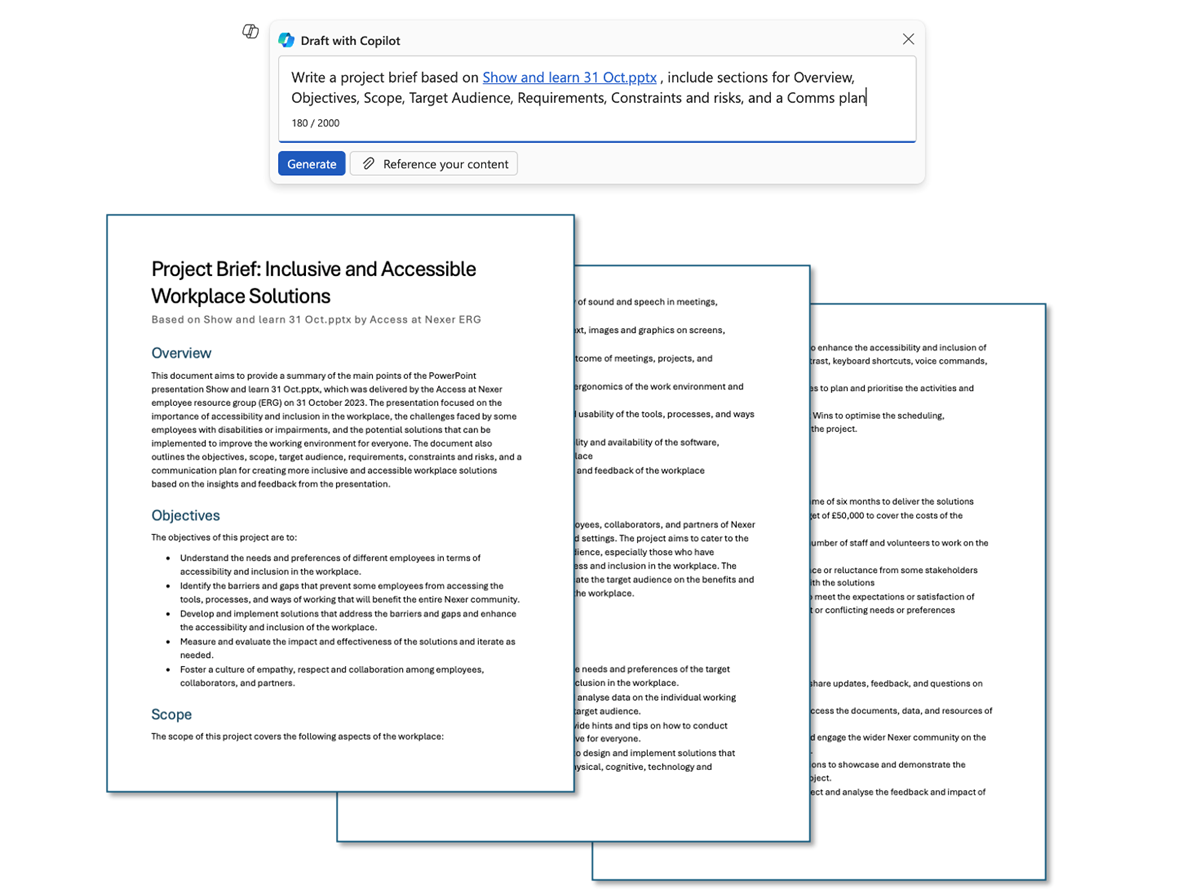

We also instructed Copilot to create a first draft of a project kick-off document. This needed to contain enough information for the team to understand the objective, reasons, deliverables, and next steps. Copilot can also extract information from across Teams and Outlook, offering a helpful summary of any missed discussions, action points or follow-ups.

Finally, we ran a workshop to explore another tool in the Microsoft suite, Whiteboard, and to see how it compares to competitors like Miro (we’ll share the outcomes in another post). It was a great opportunity to see how Copilot would fare with more than two participants in a call and handle our various chats.

Here’s how Copilot summarised our session:

Using Microsoft Whiteboard in Teams: The group tested the functionality and accessibility of the whiteboard tool and compared it to Miro, which they currently use for various purposes. They found some benefits of having the whiteboard integrated in Teams, such as easier collaboration, document sharing, and pinning. They also discussed some challenges and pain points of using whiteboards in general, such as noise, context, and digital inclusion.

Using Microsoft Loop: Andrew demonstrated how he uses Loop to create and share dynamic components, such as notes, lists, tables, and kanban boards, across different platforms and locations. He explained how Loop updates in real time and can be embedded in documents, emails, chats, and channels. The group expressed interest and curiosity about Loop and how it could improve their workflows and information management.

Using Microsoft Copilot: The group used Copilot to transcribe and analyse their conversation, as well as to generate suggestions and questions based on their inputs. They explored how Copilot could help them capture and categorise information, document workflows, collate flowcharts, and identify actions and unresolved issues. They also discussed the potential and limitations of Copilot, such as its learning capabilities, data sources, and accuracy.

Next steps and actions: The group agreed to write a blog post about their test and findings, and to organise a wider team meeting to share their insights and experiences with the tools. They also suggested forming a steering group to evaluate and recommend the best tools and processes for the organisation, and to provide tailored and ongoing training and support for the staff.

Benefits for accessibility

Note capture: By capturing and noting information in meetings, Copilot simplifies what for many users can be a time-consuming or near-impossible task. When discussing the tools we use, Molly noted that while transcripts, captioning and Magnifier can all be helpful, they can have downsides inside meetings: zooming in on text can obscure the rest of the call, and transcripts can miss detail about which attendee is speaking. By automating the process, Copilot can help to mitigate some of these issues. It also reduces the cognitive load for users, allowing them to direct their attention to the meeting instead of switching back and forth while taking notes.

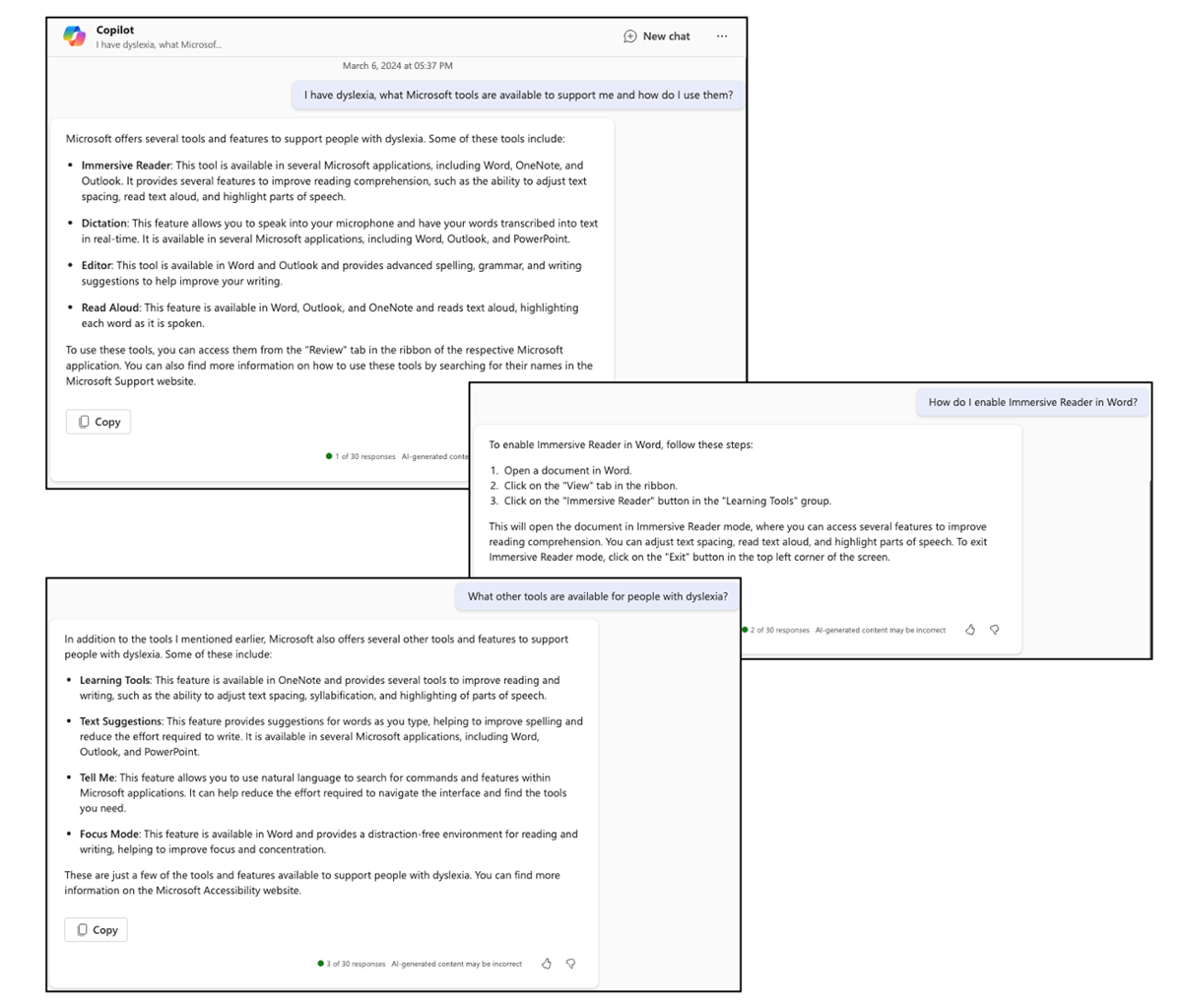

Searching within web and Microsoft 365: Similarly, Copilot can simplify the process of seeking information, particularly within Teams, where it can scan emails and channels to synthesise content. We tested this out with things like HR policies for booking holidays or instructing Copilot to give us a high-level summary of what we’d missed over time off. It can also direct users to the many accessibility options and tools available within Microsoft 365, some of which we mentioned earlier.

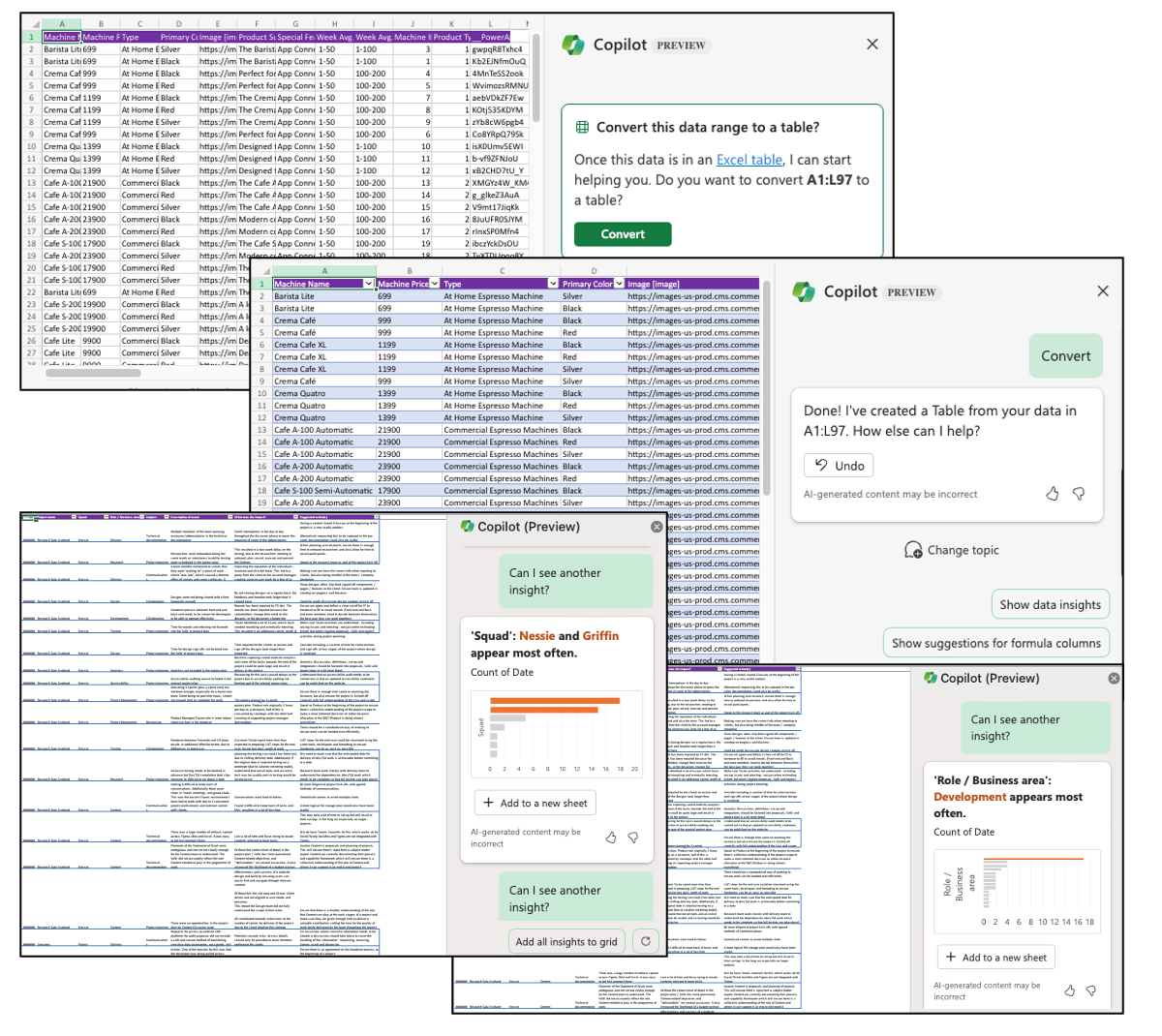

Spreadsheets: When we tested Copilot in Excel, we found that it could convert data into tables, and offer interpretations of the data itself. This can help to address some of the barriers spreadsheets can present to users, such as incompatibility with screen readers, lack of context in the presentation of data, and the visual elements often used as identifiers.

Voice activation: Copilot has voice access built in, which can be used to ask questions or give instructions, for example when writing prompts within applications like Word. We're also currently experimenting with voice profiles to assign notes to people, which can help in group situations where several people share one mic.

Here's a video from Microsoft on how AI is opening up new accessibility experiences

What hasn’t worked for us (yet)

In our Copilot tests we’ve encountered a couple of areas where it doesn’t quite succeed or work as we imagined. In one call, Copilot used the wrong gender when referring to a colleague. We found it surprising that Copilot would assume a gender at all; we had expected it to default to gender-neutral language and use participants’ names. It also couldn’t distinguish between two participants with the same name in a call, which could cause confusion in any follow-up notes or transcripts.

We also encountered some inconsistent results, which highlighted the need for training, both for the people using Copilot and for Copilot itself, and for an iterative approach to implementation.

There’s still plenty we plan to explore as part of our wider work through the Access at Nexer ERG and our ways of working. Copilot is just one small piece of the puzzle, but the potential is exciting. We’re also conscious that the tool is still in development, with plenty of new releases planned over the coming months, so stay tuned for more updates.

Look out for a guest blog in this series from our friends at Purify too, who specialise in the physical and technical set up of Teams meeting rooms and improving the Teams telephony experience.